October 28, 2019 (last updated: October 27, 2019)

MongoDB Sharded Cluster

MongoDB

https://github.com/gridgentoo/osquery

Sharding is MongoDB's method of distributing a data set across multiple machines. It lets you scale horizontally by partitioning the data across independent instances, and you can add more machines to the cluster as your data grows.

To put it simply, imagine your data is a music collection. Sharding splits the collection into different folders stored on different instances or replica sets, while replication just keeps a synchronized copy of the whole collection on other instances.

Shard - Stores a subset of the cluster's data; together, the shards hold the entire data set. In a production environment, each shard should be a replica set, which provides high availability and data redundancy.

Config Server - Stores the cluster metadata, including the mapping between the data set and the shards. The mongos query routers use this metadata to route operations. In production, run the config servers as a replica set with at least 3 members.

Mongos/Query Router - mongos instances act as the interface between client applications and the sharded cluster. The application sends its requests to a 'mongos' instance, which uses the shard key to route each request to the appropriate shard replica set.
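The following Python sketch makes the routing step concrete with a simplified model of range-based routing. It assumes a collection sharded on 'name', as later in this tutorial. The split points and chunk owners are hypothetical. A real mongos reads the chunk table from the config servers and compares values in BSON order, not by Python string comparison.

```python
from bisect import bisect_right

# Hypothetical split points on the shard key "name". Chunk i covers
# [SPLIT_POINTS[i-1], SPLIT_POINTS[i]); the first chunk starts at MinKey
# and the last chunk ends at MaxKey.
SPLIT_POINTS = ["G", "P"]

# Hypothetical owner of each chunk (one entry per chunk: len(SPLIT_POINTS) + 1).
CHUNK_OWNERS = ["shardreplica01", "shardreplica02", "shardreplica01"]


def route(shard_key_value: str) -> str:
    """Return the shard that owns the chunk containing shard_key_value."""
    # bisect_right counts the split points <= value, which is the chunk index
    # when chunks use an inclusive lower bound and an exclusive upper bound.
    chunk_index = bisect_right(SPLIT_POINTS, shard_key_value)
    return CHUNK_OWNERS[chunk_index]


print(route("LEMP Stack"))  # prints shardreplica02 ("G" <= "LEMP Stack" < "P")
```

A value equal to a split point, such as "G", belongs to the chunk that starts there. This matches the inclusive-lower, exclusive-upper bounds of MongoDB chunks.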

In this tutorial, we will disable SELinux. Change SELinux configuration from 'enforcing' to 'disabled'.

Connect to all nodes through OpenSSH.

ssh root@SERVERIP

Disable SELinux by editing the configuration file.

vim /etc/sysconfig/selinux

Change the SELINUX value to 'disabled'.

SELINUX=disabled

Save and exit.
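If you prefer to script this change instead of using an editor, here is a minimal Python sketch of the same edit. It only rewrites the active 'SELINUX=' line in the file's contents. Reading and writing the real file (as root) is left to the caller, and the sample text is illustrative.

```python
import re


def disable_selinux(config_text: str) -> str:
    """Return config_text with the active SELINUX= line set to 'disabled'.

    Comment lines and the separate SELINUXTYPE= setting are left untouched.
    """
    return re.sub(r"^SELINUX=.*$", "SELINUX=disabled", config_text, flags=re.MULTILINE)


sample = (
    "# SELINUX= can take one of these three values:\n"
    "SELINUX=enforcing\n"
    "SELINUXTYPE=targeted\n"
)
print(disable_selinux(sample))
```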

Next, edit the hosts file on each server.

vim /etc/hosts

Paste the following hosts configuration:

10.0.15.31 configsvr1
10.0.15.32 configsvr2
10.0.15.11 mongos
10.0.15.21 shardsvr1
10.0.15.22 shardsvr2
10.0.15.23 shardsvr3
10.0.15.24 shardsvr4

Save and exit.
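The same entries must be present on every node, so it can help to generate them from a single table. The Python sketch below copies the host-to-IP mapping from this tutorial. It renders the /etc/hosts lines and refuses to assign one IP address to two host names. Distributing the file to each server is left out.

```python
from typing import Dict

# Host name -> internal IP address, as used throughout this tutorial.
CLUSTER_HOSTS: Dict[str, str] = {
    "configsvr1": "10.0.15.31",
    "configsvr2": "10.0.15.32",
    "mongos": "10.0.15.11",
    "shardsvr1": "10.0.15.21",
    "shardsvr2": "10.0.15.22",
    "shardsvr3": "10.0.15.23",
    "shardsvr4": "10.0.15.24",
}


def render_hosts(hosts: Dict[str, str]) -> str:
    """Render 'IP hostname' lines for /etc/hosts, refusing duplicate IPs."""
    ips = list(hosts.values())
    if len(set(ips)) != len(ips):
        raise ValueError("two host names share the same IP address")
    return "".join(f"{ip} {name}\n" for name, ip in hosts.items())


print(render_hosts(CLUSTER_HOSTS), end="")
```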

Now restart all servers using the reboot command.

reboot

We will use MongoDB 3.4 for all instances. Add the MongoDB repository by executing the following command:

cat <<'EOF' >> /etc/yum.repos.d/mongodb.repo
[mongodb-org-3.4]
name=MongoDB Repository
baseurl=https://repo.mongodb.org/yum/redhat/$releasever/mongodb-org/3.4/x86_64/
gpgcheck=1
enabled=1
gpgkey=https://www.mongodb.org/static/pgp/server-3.4.asc
EOF

Now install MongoDB 3.4 from the MongoDB repository using the yum command below.

sudo yum -y install mongodb-org

After MongoDB is installed, use the 'mongo' or 'mongod' command as follows to check the version details.

mongod --version

In the prerequisites section, we defined the config servers as two machines, 'configsvr1' and 'configsvr2'. In this step, we will configure them as a replica set.

If there is a mongod service running on the server, stop it using the systemctl command.

systemctl stop mongod

Edit the default MongoDB configuration file 'mongod.conf' using the Vim editor.

vim /etc/mongod.conf

Change the DB storage path to your own directory. We will use '/data/db1' for the first config server and '/data/db2' for the second.

storage:
  dbPath: /data/db1

In the 'net' section, change the value of 'bindIp' to the server's internal network address: 10.0.15.31 for 'configsvr1' and 10.0.15.32 for the second server.

net:
  bindIp: 10.0.15.31

In the replication section, set a replica set name.

replication:
  replSetName: "replconfig01"

Under the sharding section, define the role of the instances. We will use these two instances as config servers ('configsvr').

sharding:
  clusterRole: configsvr

Save and exit.
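In mongod.conf, each option must be indented under its section. The following Python sketch renders the four sections edited above with correct nesting for any node. It is a hypothetical helper, not part of MongoDB, and it covers only the sections this tutorial touches; keep the packaged file's other sections, such as systemLog and processManagement. It writes 'port: 27017' explicitly because, when no port is set, mongod defaults to 27019 with 'clusterRole: configsvr' and 27018 with 'clusterRole: shardsvr'.

```python
def render_mongod_conf(db_path: str, bind_ip: str, repl_set: str,
                       cluster_role: str, port: int = 27017) -> str:
    """Render the storage, net, replication and sharding sections of mongod.conf."""
    if cluster_role not in ("configsvr", "shardsvr"):
        raise ValueError("cluster_role must be 'configsvr' or 'shardsvr'")
    return (
        "storage:\n"
        f"  dbPath: {db_path}\n"
        "net:\n"
        f"  port: {port}\n"
        f"  bindIp: {bind_ip}\n"
        "replication:\n"
        f'  replSetName: "{repl_set}"\n'
        "sharding:\n"
        f"  clusterRole: {cluster_role}\n"
    )


# Values for configsvr1 from this tutorial.
print(render_mongod_conf("/data/db1", "10.0.15.31", "replconfig01", "configsvr"), end="")
```

For a shard server such as shardsvr1, pass "10.0.15.21", "shardreplica01" and "shardsvr" instead.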

Next, we must create a new directory for MongoDB data, and then change the owner of that directory to 'mongod' user.

mkdir -p /data/db1
chown -R mongod:mongod /data/db1

After this, start the mongod service with the command below.

mongod --config /etc/mongod.conf

You can use the netstat command to check whether the mongod service is running on port 27017.

netstat -plntu
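netstat only shows that mongod is listening on the local machine. To confirm that the port is also reachable over the internal network (for example, from the mongos host), a small Python check like the one below can help. The function name is hypothetical, and it only tests whether a TCP connection succeeds, not whether MongoDB is healthy.

```python
import socket


def is_listening(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        # create_connection resolves the name (e.g. via /etc/hosts) and connects.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name-resolution failures.
        return False
```

For example, is_listening("configsvr1", 27017) should return True once mongod is running on configsvr1 and bound to 10.0.15.31.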

'configsvr1' and 'configsvr2' are now ready for the replica set. Connect to the 'configsvr1' server and open the mongo shell.

ssh root@configsvr1

mongo --host configsvr1 --port 27017

Initiate the replica set with both config server members using the command below.

rs.initiate(
  {
    _id: "replconfig01",
    configsvr: true,
    members: [
      { _id : 0, host : "configsvr1:27017" },
      { _id : 1, host : "configsvr2:27017" }
    ]
  }
)

If you get the result '{ "ok" : 1 }', the config server replica set has been configured.

You can then check which node is the PRIMARY and which is the SECONDARY.

rs.isMaster()

rs.status()

The configuration of Config Server Replica Set is done.
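The document passed to rs.initiate() is ordinary JSON-like data, so it can also be generated. The Python sketch below uses a hypothetical helper (not a MongoDB API) to build the same document for the config servers, numbering members from 0. The printed JSON can be pasted inside rs.initiate( ... ) in the mongo shell. With configsvr=False, the same helper produces the shard replica set documents used in the next step.

```python
import json
from typing import Any, Dict, List


def replica_set_config(name: str, hosts: List[str], port: int = 27017,
                       configsvr: bool = False) -> Dict[str, Any]:
    """Build a replica set configuration document for rs.initiate()."""
    config: Dict[str, Any] = {"_id": name}
    if configsvr:
        # Required for the replica set that backs the config servers.
        config["configsvr"] = True
    config["members"] = [
        {"_id": member_id, "host": f"{host}:{port}"}
        for member_id, host in enumerate(hosts)
    ]
    return config


print(json.dumps(replica_set_config("replconfig01", ["configsvr1", "configsvr2"],
                                    configsvr=True), indent=2))
```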

In this step, we will configure four CentOS 7 servers as shard servers, organized into two replica sets.

Connect to each server, stop the mongod service (if it is running), and edit the MongoDB configuration file.

systemctl stop mongod

vim /etc/mongod.conf

Change the default storage path to your own directory.

storage:
  dbPath: /data/db1

In the 'net' section, change the value of 'bindIp' to the server's internal network address.

net:
  bindIp: 10.0.15.21

In the replication section, use 'shardreplica01' for the first and second shard servers, and 'shardreplica02' for the third and fourth.

replication:
  replSetName: "shardreplica01"

Next, define the role of the server. All four servers are shard ('shardsvr') instances.

sharding:
  clusterRole: shardsvr

Save and exit.

Now, create a new directory for MongoDB data.

mkdir -p /data/db1
chown -R mongod:mongod /data/db1

Start the mongod service.

mongod --config /etc/mongod.conf

Check that MongoDB is running using the following command:

netstat -plntu

You should see mongod listening on the server's internal network address.

Next, create a replica set for the first two shard instances. Connect to 'shardsvr1' and open the mongo shell.

ssh root@shardsvr1

mongo --host shardsvr1 --port 27017

Initiate the replica set with the name 'shardreplica01', with 'shardsvr1' and 'shardsvr2' as members.

rs.initiate(
  {
    _id : "shardreplica01",
    members: [
      { _id : 0, host : "shardsvr1:27017" },
      { _id : 1, host : "shardsvr2:27017" }
    ]
  }
)

If there is no error, the shell returns '{ "ok" : 1 }'.

Repeat this step on shardsvr3 and shardsvr4, using the replica set name 'shardreplica02'.

We have now created two replica sets, 'shardreplica01' and 'shardreplica02', to serve as the shards.

The query router is simply an instance running the 'mongos' process. You can run mongos with a configuration file or with command-line options only.

Log in to the mongos server and stop the MongoDB service.

ssh root@mongos

systemctl stop mongod

Run mongos from the command line as shown below.

mongos --configdb "replconfig01/configsvr1:27017,configsvr2:27017"

The '--configdb' option takes the config server replica set name followed by its members. In production, use at least 3 config servers.
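Both '--configdb' and sh.addShard() identify a replica set by its name, then a slash, then a comma-separated list of 'host:port' members. Here is a minimal Python sketch of that string format using the names from this tutorial; the helper itself is hypothetical.

```python
from typing import Iterable


def seed_list(repl_set: str, hosts: Iterable[str], port: int = 27017) -> str:
    """Build a '<replSetName>/<host:port>,<host:port>,...' string."""
    members = ",".join(f"{host}:{port}" for host in hosts)
    return f"{repl_set}/{members}"


# Value for mongos --configdb
print(seed_list("replconfig01", ["configsvr1", "configsvr2"]))
# prints replconfig01/configsvr1:27017,configsvr2:27017

# Argument for sh.addShard() on the first shard replica set
print(seed_list("shardreplica01", ["shardsvr1", "shardsvr2"]))
# prints shardreplica01/shardsvr1:27017,shardsvr2:27017
```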

You should see results similar to the following.

Successfully connected to configsvr1:27017
Successfully connected to configsvr2:27017

The mongos instance is now running.

Because mongos runs in the foreground in the previous shell, open another shell, connect to the mongos server again, and start the mongo shell.

ssh root@mongos

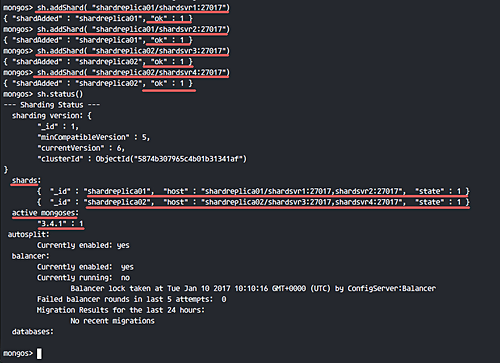

mongo --host mongos --port 27017

Add the shard servers with the sh.addShard() command.

For the 'shardreplica01' replica set:

sh.addShard("shardreplica01/shardsvr1:27017,shardsvr2:27017")

For the 'shardreplica02' replica set:

sh.addShard("shardreplica02/shardsvr3:27017,shardsvr4:27017")

A shard backed by a replica set is added once, using the replica set name followed by a seed list of its members. Make sure there is no error, then check the shard status.

sh.status()

The output shows the sharding status, including both shard replica sets.

We now have 2 shard replica sets and 1 mongos instance running in our stack.

To test the setup, access the mongos server mongo shell.

ssh root@mongos

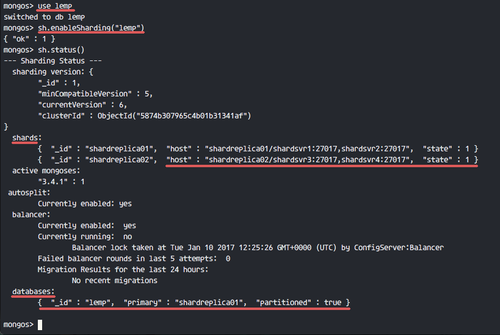

mongo --host mongos --port 27017

Enable Sharding for a Database

Create a new database and enable sharding for the new database.

use lemp

sh.enableSharding("lemp")

sh.status()

Check the database status: 'lemp' is now shown as partitioned, with the replica set 'shardreplica01' as its primary shard.

Enable Sharding for Collections

Next, add a new sharded collection to the database. We will add a collection named 'stack' with the shard key 'name', and then check the database and collection status.

sh.shardCollection("lemp.stack", {"name":1})

sh.status()

The new collection 'stack', with the shard key 'name', has been added.

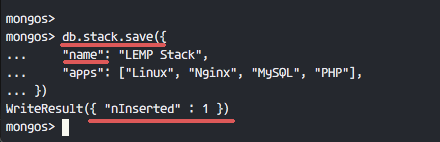

Add Documents to the Collection 'stack'

Now insert documents into the collection. When adding documents to a collection on a sharded cluster, we must include the shard key.

In the example below, we use the shard key 'name', which we defined when enabling sharding for the collection.

db.stack.save({
  "name": "LEMP Stack",
  "apps": ["Linux", "Nginx", "MySQL", "PHP"]
})

If the insert succeeds, the shell reports 'WriteResult({ "nInserted" : 1 })', and the document has been added to the collection.
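Every insert into 'lemp.stack' must carry the shard key, so an application may want to check documents before sending them to mongos. Below is a minimal Python sketch of such a client-side check. It assumes the shard key pattern {"name": 1} from this tutorial and handles only top-level (non-dotted) key fields. The helper is hypothetical; MongoDB itself performs the authoritative check.

```python
from typing import Any, Dict, List, Mapping

# Shard key pattern used for lemp.stack in this tutorial.
SHARD_KEY: Mapping[str, int] = {"name": 1}


def missing_shard_key_fields(doc: Dict[str, Any],
                             shard_key: Mapping[str, int] = SHARD_KEY) -> List[str]:
    """Return the shard key fields that doc lacks (an empty list if it has them all)."""
    return [field for field in shard_key if field not in doc]


doc = {"name": "LEMP Stack", "apps": ["Linux", "Nginx", "MySQL", "PHP"]}
print(missing_shard_key_fields(doc))  # prints []
```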

If you want to test the database, you can connect to the replica set 'shardreplica01' PRIMARY server and open the mongo shell. I'm logging in to the 'shardsvr2' PRIMARY server.

ssh root@shardsvr2

mongo --host shardsvr2 --port 27017

Check which databases are available on the replica set.

show dbs

use lemp

db.stack.find()

You will see that the database, collection, and document are available in the replica set.

The MongoDB sharded cluster on CentOS 7 has been successfully installed and deployed.


